Understanding ARKit Tracking and Detection

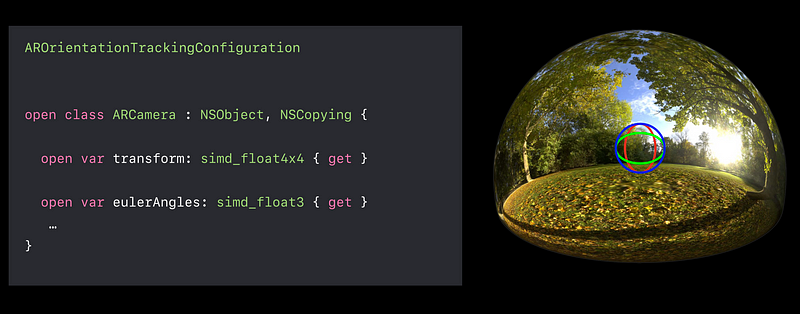

- Tracks orientation only (3 DoF)

- Spherical virtual environment

- Augmentation of far objects

- Not suited for physical world augmentation from different views
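As a minimal sketch of the orientation-only mode described above, the following builds a 3 DoF configuration with ARKit's `AROrientationTrackingConfiguration`. The helper name `makeOrientationConfiguration` and the `sceneView` mentioned in the comment are illustrative assumptions, not part of the original slides.

```swift
import ARKit

/// Builds a 3 DoF configuration: device rotation is tracked, position is not.
/// (Hypothetical helper name, used here for illustration only.)
func makeOrientationConfiguration() -> AROrientationTrackingConfiguration {
    let configuration = AROrientationTrackingConfiguration()
    // Align the virtual world's y-axis with gravity (this is also the default).
    configuration.worldAlignment = .gravity
    return configuration
}

// Typical use, assuming a view controller that owns an ARSCNView named `sceneView`:
// sceneView.session.run(makeOrientationConfiguration())
```

Because position is not tracked, content placed this way behaves as if it were painted on a sphere around the device, which is why this mode suits far-away augmentations only.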

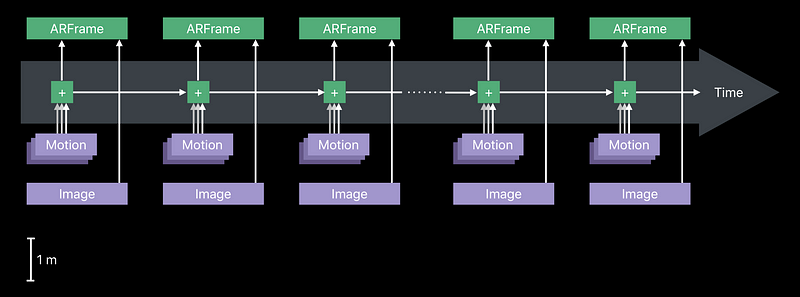

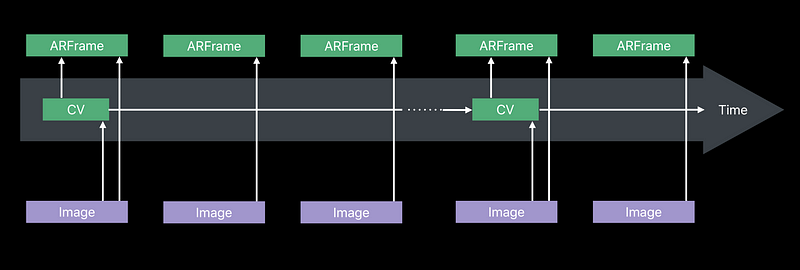

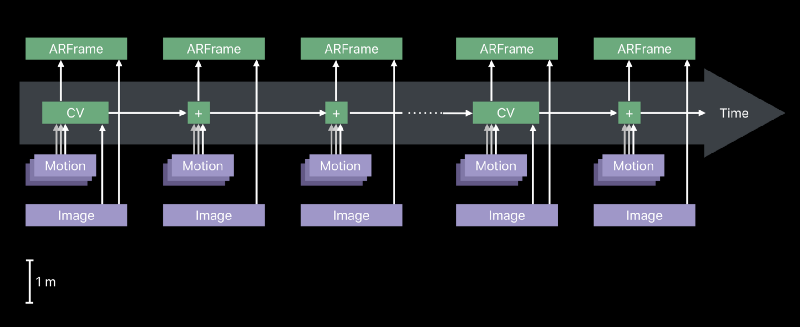

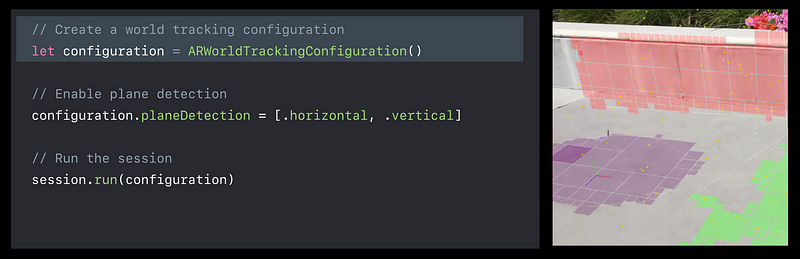

Motion sensor

Computer vision

Motion data and computer vision

- Uninterrupted sensor data

- Textured environments

- Static scenes

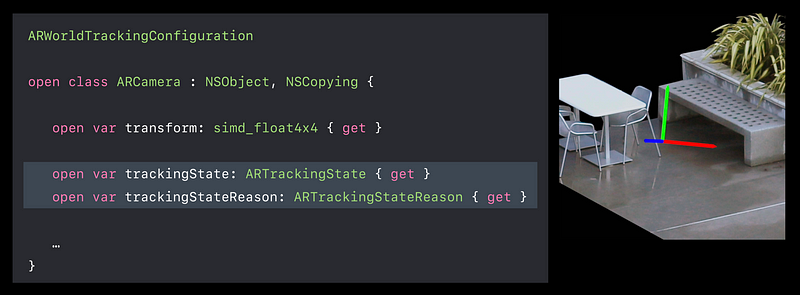

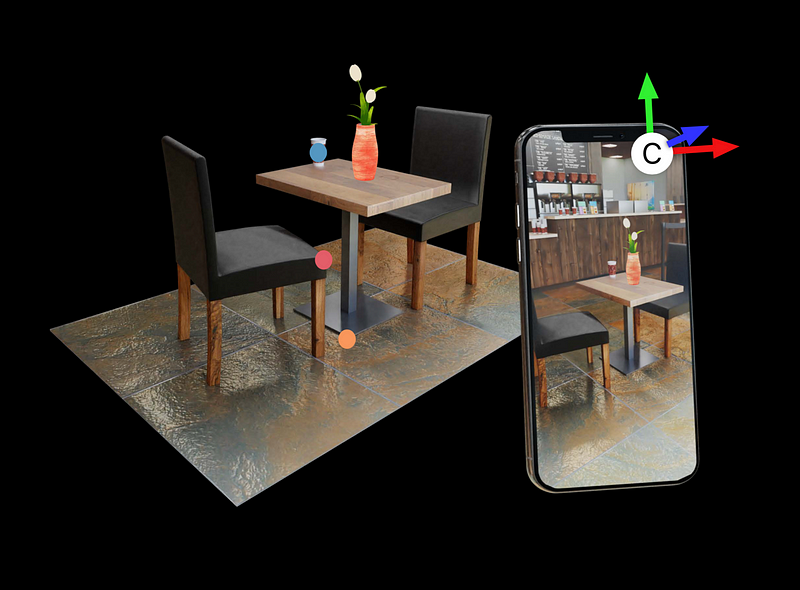

- Tracks orientation and position (6 DoF)

- Augmentation into your physical world

- World Map

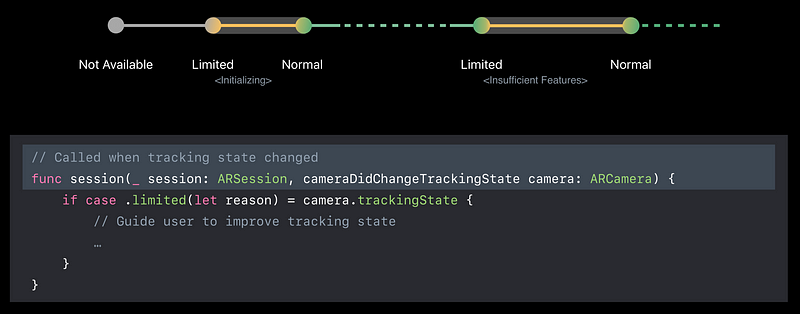

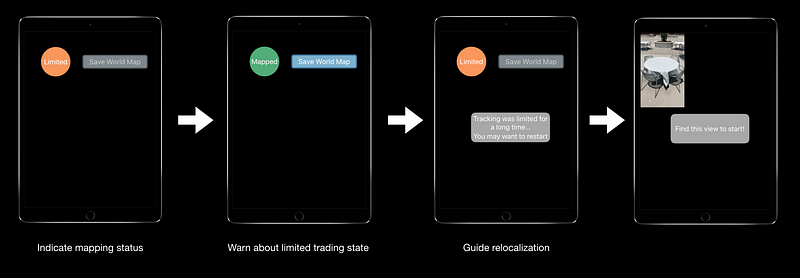

- Guide user to achieve best tracking quality

- Runs on your device only

- Sample: “Building Your First AR Experience”
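One way to guide the user toward good tracking quality is to translate the camera's tracking state into a short hint. The sketch below assumes a hypothetical helper, `userGuidance(for:)`, and made-up message strings; only the `ARCamera.TrackingState` cases come from ARKit.

```swift
import ARKit

/// Maps a tracking state to a user-facing hint, or nil when tracking is normal.
/// (Hypothetical helper; the message wording is illustrative.)
func userGuidance(for trackingState: ARCamera.TrackingState) -> String? {
    switch trackingState {
    case .normal:
        return nil
    case .notAvailable:
        return "Tracking is not available."
    case .limited(.initializing):
        return "Move the device slowly to start tracking."
    case .limited(.excessiveMotion):
        return "Slow down: the device is moving too fast."
    case .limited(.insufficientFeatures):
        return "Point the camera at a well-lit, textured surface."
    case .limited(.relocalizing):
        return "Return to the area where the session started."
    case .limited:
        // Covers any limited reason not listed above.
        return "Tracking is limited."
    }
}

// Typical use, inside an ARSessionObserver / ARSCNViewDelegate implementation:
// func session(_ session: ARSession, cameraDidChangeTrackingState camera: ARCamera) {
//     statusLabel.text = userGuidance(for: camera.trackingState)  // `statusLabel` is assumed
// }
```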

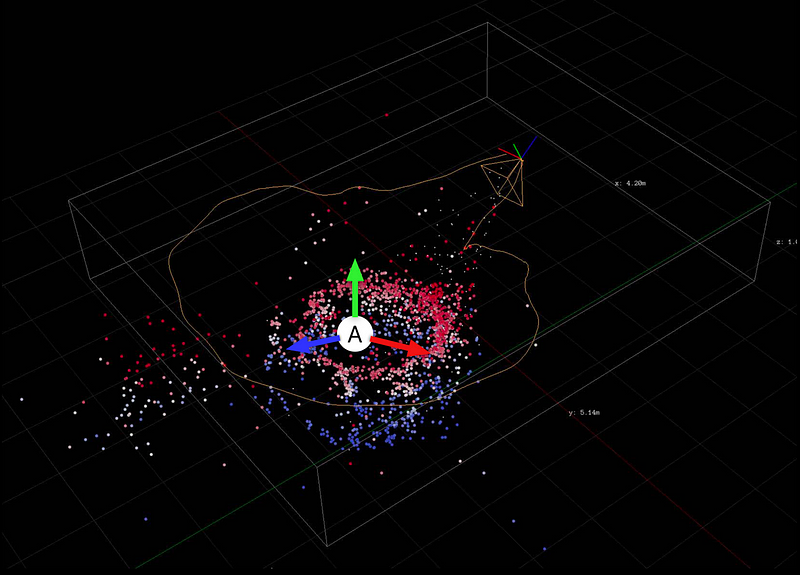

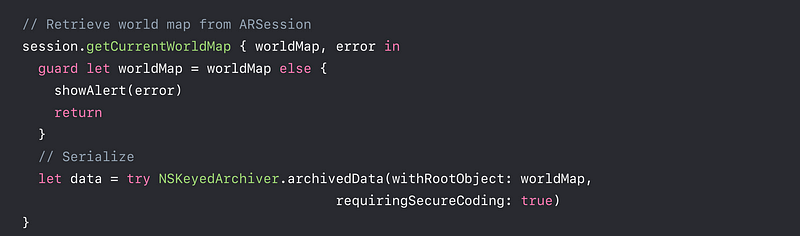

- Acquire a good World Map

- Share the World Map

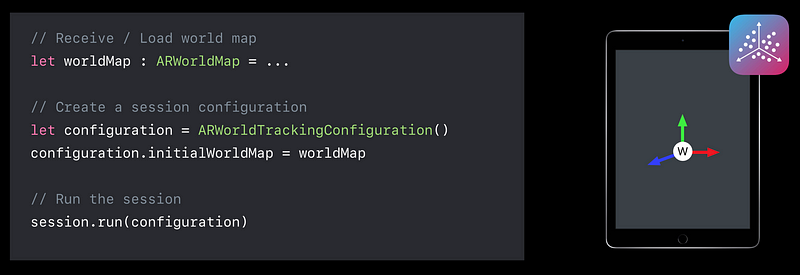

- Relocalize to the World Map

- Internal tracking data

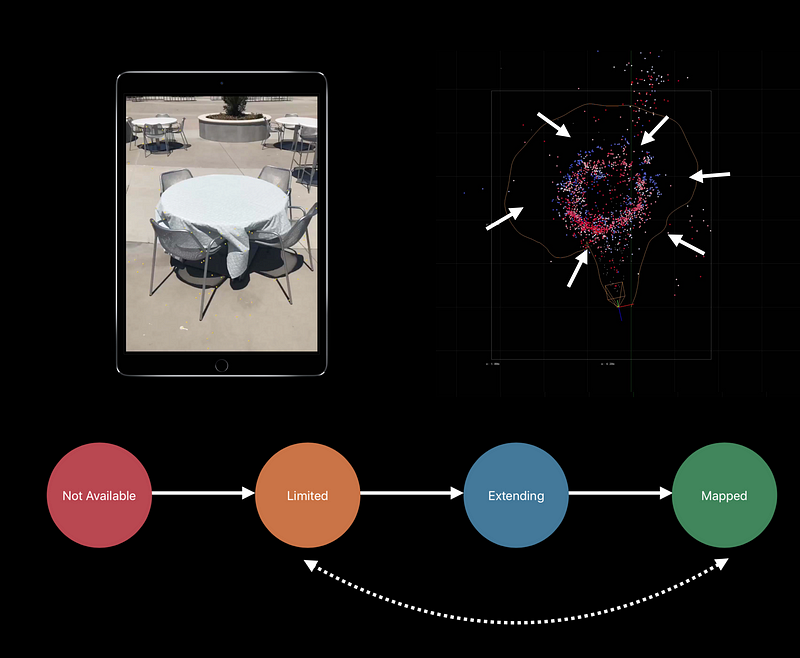

- Map of 3D feature points

- Local appearance

- List of named anchors

- Serializable
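Since a world map is serializable, it can be turned into `Data` for saving to disk or sending to another device. The sketch below uses Foundation's keyed archiving with `ARWorldMap`; the function names `encode(_:)` and `decodeWorldMap(from:)` are assumptions for illustration.

```swift
import ARKit

/// Serializes a world map so it can be written to disk or sent over the network.
/// (Hypothetical helper name.)
func encode(_ worldMap: ARWorldMap) throws -> Data {
    return try NSKeyedArchiver.archivedData(withRootObject: worldMap,
                                            requiringSecureCoding: true)
}

/// Restores a world map from previously archived data.
/// Throws, or returns nil, if the data does not contain a valid ARWorldMap.
func decodeWorldMap(from data: Data) throws -> ARWorldMap? {
    return try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self,
                                                  from: data)
}
```

How the resulting `Data` is transported (file, network session, cloud storage) is left to the app.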

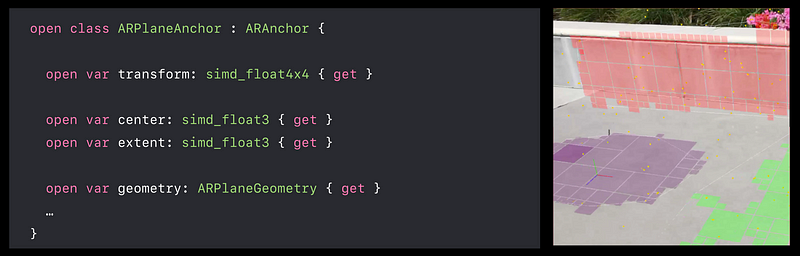

- Dense feature points on map

- Static environment

- Multiple points of view

- World mapping status
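The world mapping status can be used to decide when a map is good enough to capture. The following sketch is deliberately strict and waits for `.mapped`; the helper names are hypothetical, and an app might reasonably also accept `.extending`.

```swift
import ARKit

/// Returns true when the area currently in view is mapped well enough to save.
/// (Hypothetical helper; the strict `.mapped`-only policy is an assumption.)
func isReadyToCapture(_ status: ARFrame.WorldMappingStatus) -> Bool {
    switch status {
    case .mapped:
        return true
    case .notAvailable, .limited, .extending:
        return false
    @unknown default:
        return false
    }
}

/// Captures the current world map only if the latest frame reports a good status.
func captureWorldMapIfReady(from session: ARSession,
                            completion: @escaping (ARWorldMap?) -> Void) {
    guard let frame = session.currentFrame,
          isReadyToCapture(frame.worldMappingStatus) else {
        completion(nil)  // Not ready: ask the user to keep scanning.
        return
    }
    session.getCurrentWorldMap { worldMap, _ in
        completion(worldMap)
    }
}
```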

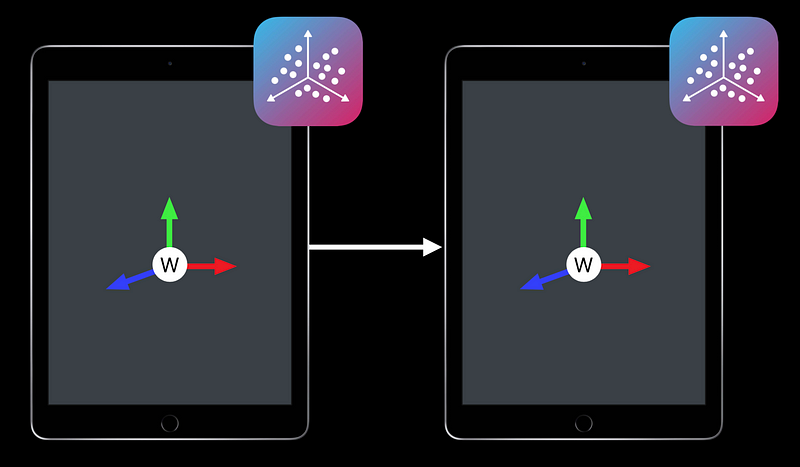

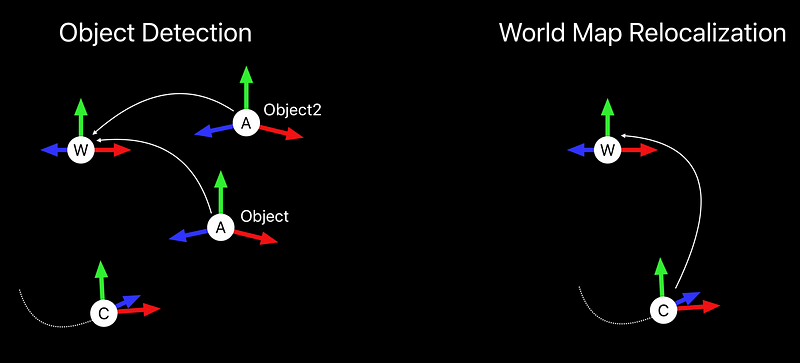

Before Relocalization

- Tracking state is Limited with reason Relocalizing

- World origin is first camera

After Relocalization

- Tracking state is Normal

- World origin is initial world map

- Only minor changes in environment allowed
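Relocalization starts when a session is run with a previously captured map as its initial world map. The sketch below shows one way to set that up and to detect the "Limited, Relocalizing" state; both helper names are assumptions.

```swift
import ARKit

/// Builds a world tracking configuration that tries to relocalize to `worldMap`.
/// (Hypothetical helper name.)
func makeRelocalizingConfiguration(with worldMap: ARWorldMap) -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.initialWorldMap = worldMap
    return configuration
}

/// True while ARKit is still trying to match the camera feed to the loaded map.
func isRelocalizing(_ state: ARCamera.TrackingState) -> Bool {
    if case .limited(.relocalizing) = state {
        return true
    }
    return false
}

// Typical use, assuming an existing `session` and a decoded `worldMap`:
// session.run(makeRelocalizingConfiguration(with: worldMap),
//             options: [.resetTracking, .removeExistingAnchors])
```

Until `isRelocalizing` returns false and the state becomes normal, content anchored in the saved map should not be treated as correctly placed.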

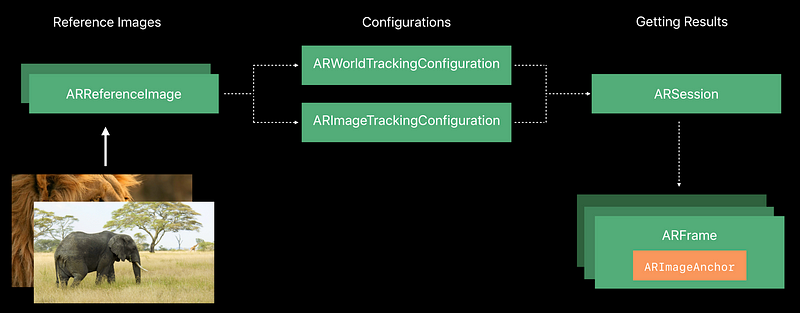

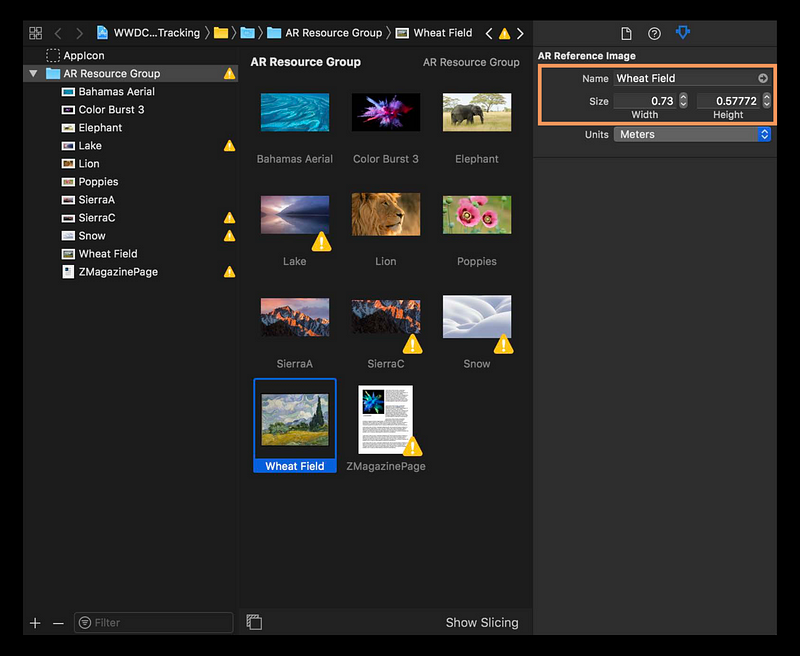

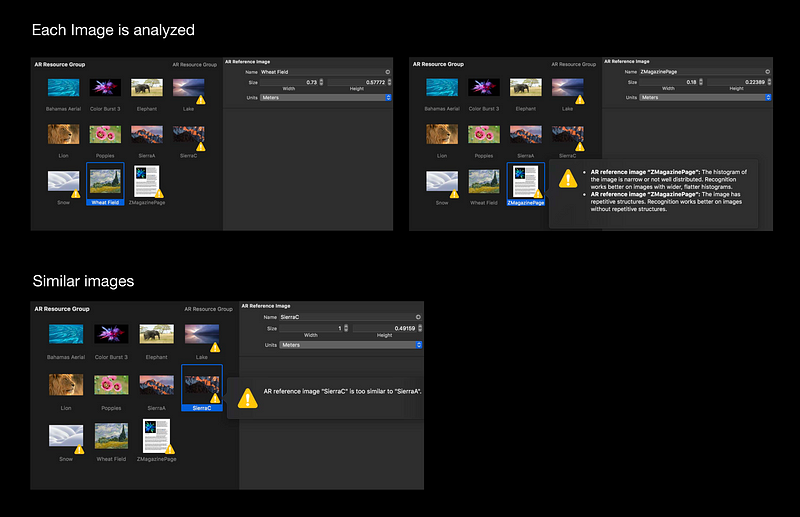

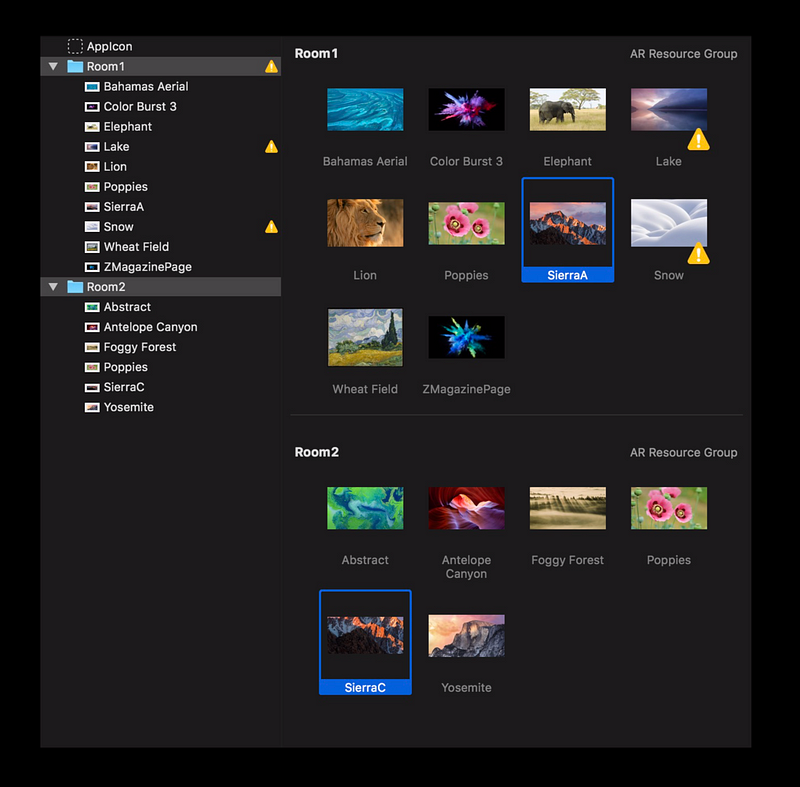

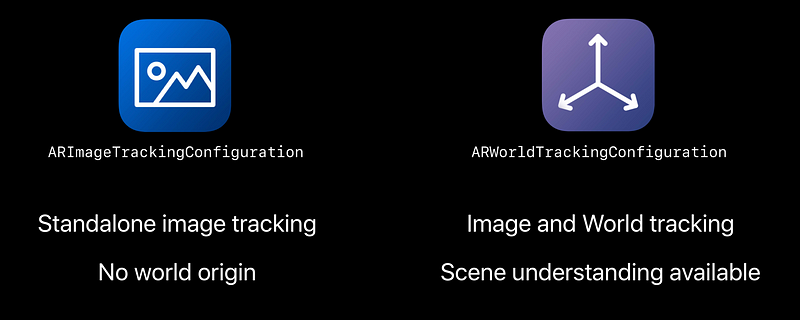

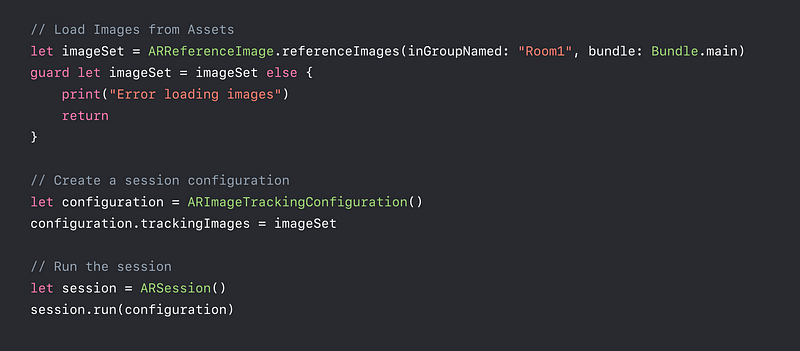

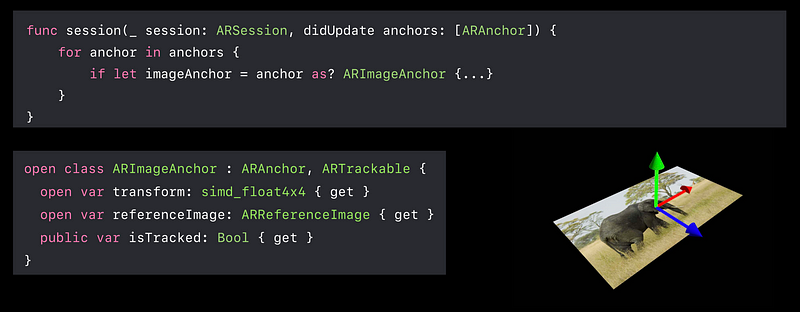

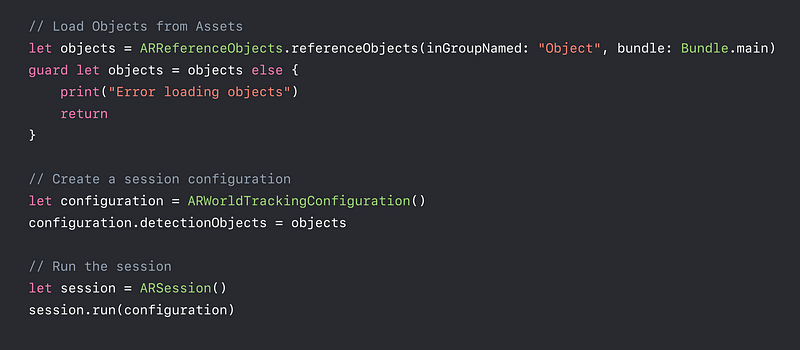

- Create AR Resource Group

- Drag images to be detected

- Set physical dimension for images

- Physical image size must be known

- Allows content to be in physical dimension

- Consistent with world tracking data
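The steps above can be sketched in code as follows: load the reference images from an AR Resource Group, enable detection, and use the known physical size to build an overlay that matches the real image. The group name passed in and both helper names are assumptions.

```swift
import ARKit
import SceneKit
import UIKit

/// Loads the reference images in an asset catalog group and enables detection.
/// Returns nil if the group cannot be loaded. (Hypothetical helper name.)
func makeImageDetectionConfiguration(groupName: String) -> ARWorldTrackingConfiguration? {
    guard let images = ARReferenceImage.referenceImages(inGroupNamed: groupName,
                                                        bundle: nil) else {
        return nil
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.detectionImages = images
    return configuration
}

/// Builds a translucent plane with the same physical size (in meters) as the
/// detected image, so the overlay is consistent with world tracking data.
func makeOverlayNode(for imageAnchor: ARImageAnchor) -> SCNNode {
    let size = imageAnchor.referenceImage.physicalSize
    let plane = SCNPlane(width: size.width, height: size.height)
    plane.firstMaterial?.diffuse.contents = UIColor.yellow.withAlphaComponent(0.5)
    let node = SCNNode(geometry: plane)
    // SCNPlane is vertical by default; rotate it to lie flat on the image.
    node.eulerAngles.x = -.pi / 2
    return node
}
```

In an `ARSCNViewDelegate`, the overlay node would typically be added as a child of the node ARKit creates for the `ARImageAnchor`.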

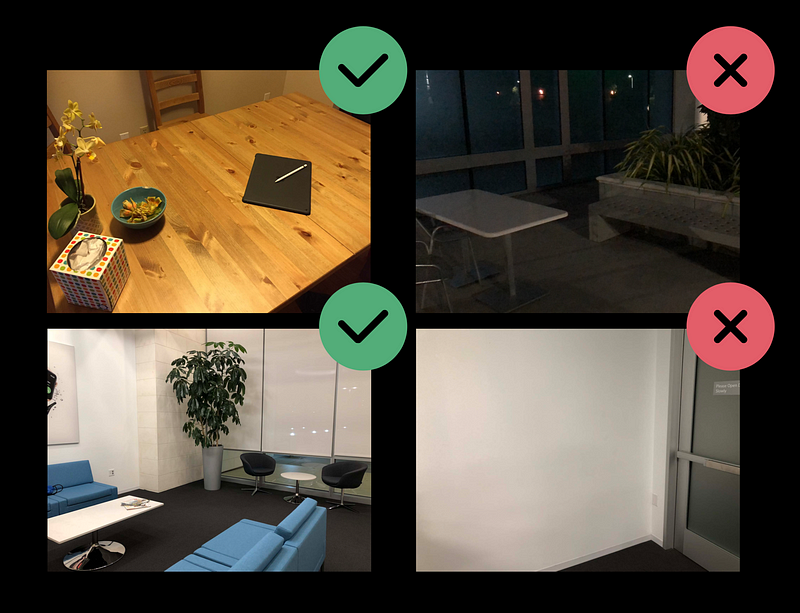

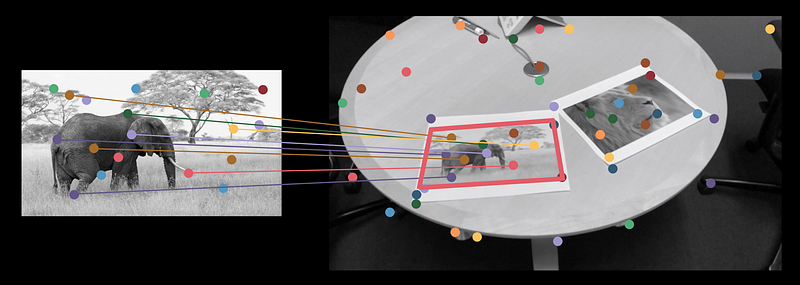

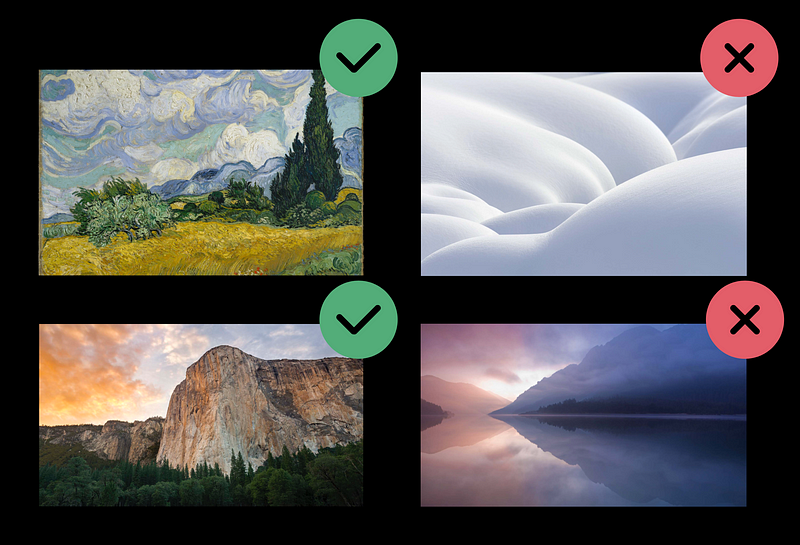

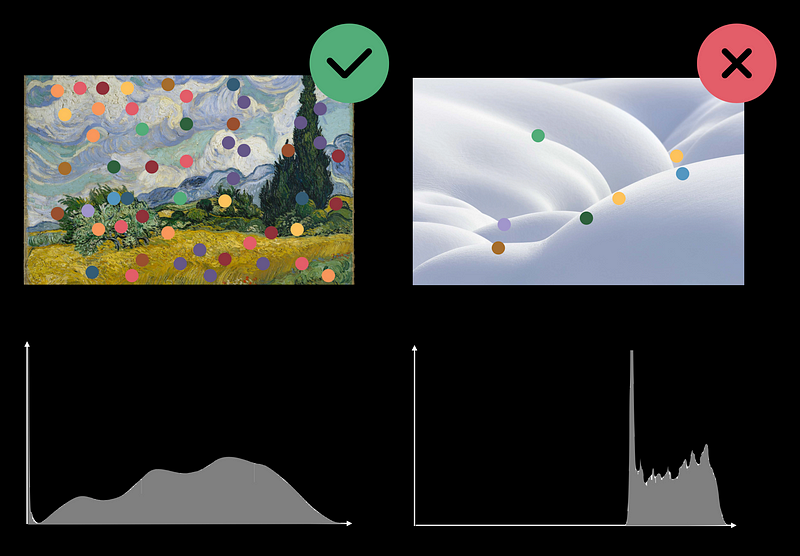

Good Images to Track

- High texture

- High local contrast

- Well distributed histogram

- No repetitive structures

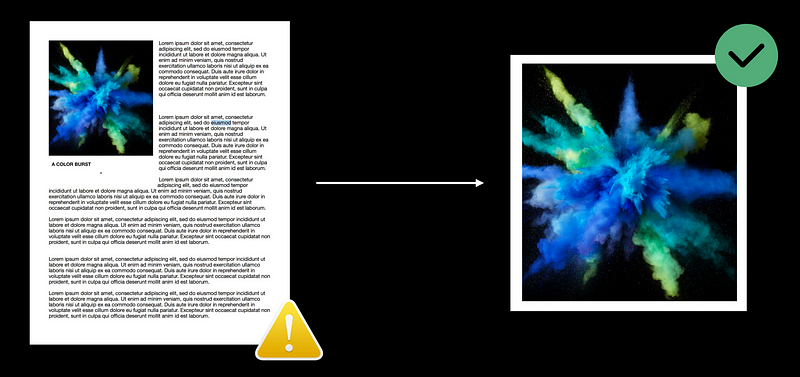

Crop image to its core content

- Allow many more images to be detected

- Max 25 images per group recommended

- Switch between groups programmatically
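Switching between groups programmatically could look like the sketch below, which re-runs the session with the images of a different AR Resource Group. The function name and the example group names in the comment are hypothetical.

```swift
import ARKit

/// Replaces the set of detection images with those from another resource group.
/// Returns false if the group cannot be loaded. (Hypothetical helper name.)
func switchDetectionImages(to groupName: String, on session: ARSession) -> Bool {
    guard let images = ARReferenceImage.referenceImages(inGroupNamed: groupName,
                                                        bundle: nil) else {
        return false
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.detectionImages = images
    // Running without reset options keeps the current tracking state and anchors.
    session.run(configuration)
    return true
}

// Example, assuming groups named "Lobby" and "Gallery" exist in the asset catalog:
// _ = switchDetectionImages(to: userIsInLobby ? "Lobby" : "Gallery", on: session)
```

Keeping each group small and loading only the one relevant to the user's current context is one way to stay within the recommended group size.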

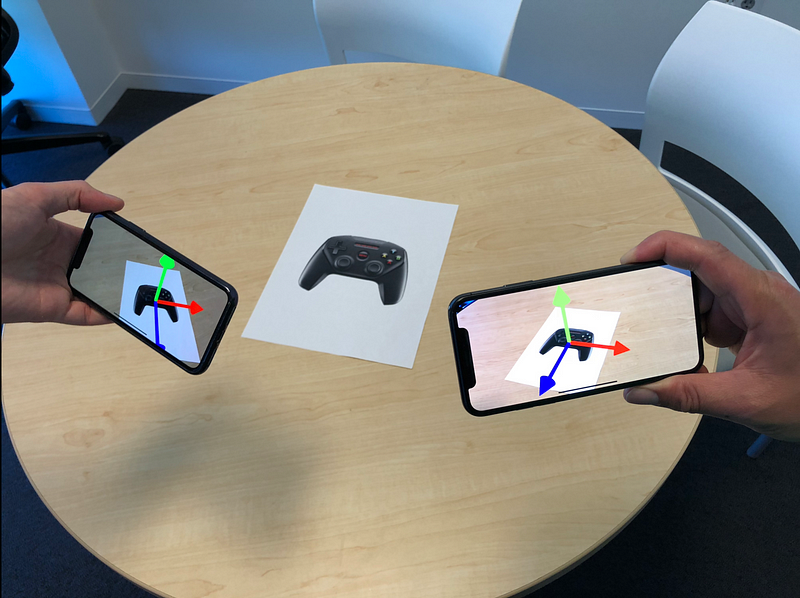

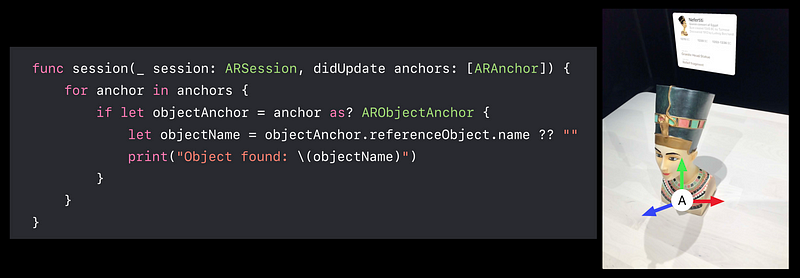

Absolute Coordinate Space for Shared Experiences

Absolute Coordinate Space at Precise Location

- Similar representation as a world map

- Use “Scanning and Detecting 3D Objects”

- Detection quality is affected by scanning quality

Share ARReferenceObject

- Rigid objects

- Texture rich

- Not reflective

- Not transparent
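As a rough sketch of object detection, the following enables detection of scanned objects from an asset catalog group and loads a shared `ARReferenceObject` from an archive file. The helper names and the file location are assumptions; scanning itself is covered by the “Scanning and Detecting 3D Objects” sample.

```swift
import ARKit

/// Enables detection of the scanned objects stored in an asset catalog group.
/// Returns nil if the group cannot be loaded. (Hypothetical helper name.)
func makeObjectDetectionConfiguration(groupName: String) -> ARWorldTrackingConfiguration? {
    guard let objects = ARReferenceObject.referenceObjects(inGroupNamed: groupName,
                                                           bundle: nil) else {
        return nil
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.detectionObjects = objects
    return configuration
}

/// Loads a reference object that was shared as an archive file,
/// for example one written earlier with `export(to:previewImage:)`.
func loadSharedObject(at url: URL) throws -> ARReferenceObject {
    return try ARReferenceObject(archiveURL: url)
}
```

When an object is detected, ARKit adds an `ARObjectAnchor` to the session, which can then be used to place content relative to the physical object.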