Metal for Accelerating Machine Learning

WWDC 2018

Metal Performance Shaders

GPU-accelerated primitives, optimized for iOS and macOS

- Image processing

- Linear algebra

- Machine learning

  - Inference

  - Training (new)

- Ray tracing (new)

Training

Inference

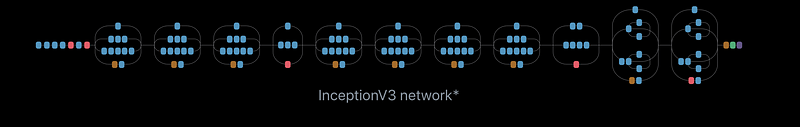

CNN Inference Enhancements

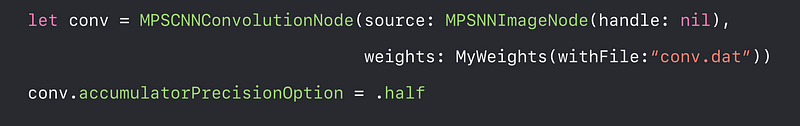

FP16 accumulation

- Available with Apple A11 Bionic GPU for

  - Convolution

  - Convolution transpose

- Sufficient precision for commonly used neural networks

- Delivers better performance than FP32
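
To make "FP16 accumulation" concrete, here is a minimal Python sketch (not MPS code) of a dot product, the core operation inside a convolution, computed twice: once with the running sum rounded to half precision after every step, and once in double precision. The helper names `to_fp16`, `dot_fp16`, and `dot_reference`, and the sample values, are assumptions made for illustration; on the GPU the rounding happens in hardware.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def dot_fp16(xs, ws):
    """Dot product where inputs, products, and the accumulator are all FP16."""
    acc = 0.0
    for x, w in zip(xs, ws):
        product = to_fp16(to_fp16(x) * to_fp16(w))
        acc = to_fp16(acc + product)  # accumulator is rounded after every add
    return acc


def dot_reference(xs, ws):
    """Same dot product accumulated in double precision."""
    return sum(x * w for x, w in zip(xs, ws))


# Hypothetical activations and weights (all positive, well inside FP16 range).
xs = [0.01 * (i + 1) for i in range(64)]
ws = [0.5 - 0.005 * i for i in range(64)]

low = dot_fp16(xs, ws)
ref = dot_reference(xs, ws)
relative_error = abs(low - ref) / ref
print(f"fp16: {low:.4f}  reference: {ref:.4f}  relative error: {relative_error:.2e}")
```

Rounding error grows with the number of terms accumulated, so whether half-precision accumulation is sufficient depends on the network being run.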

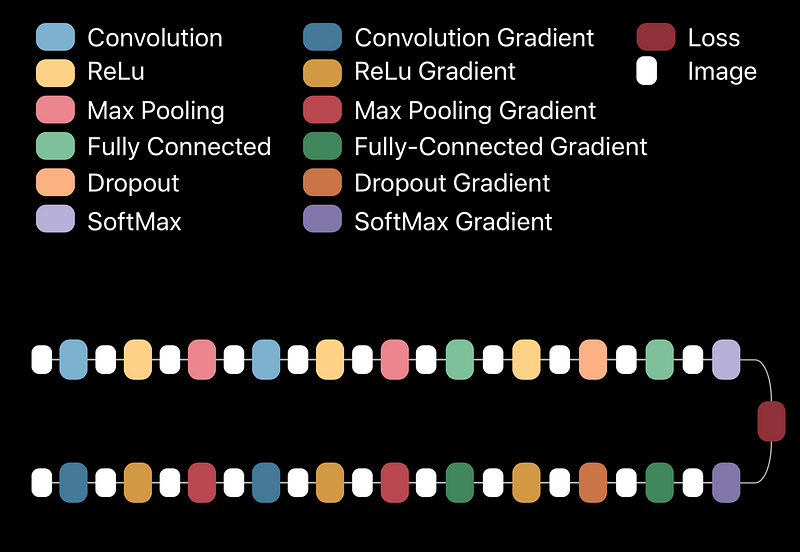

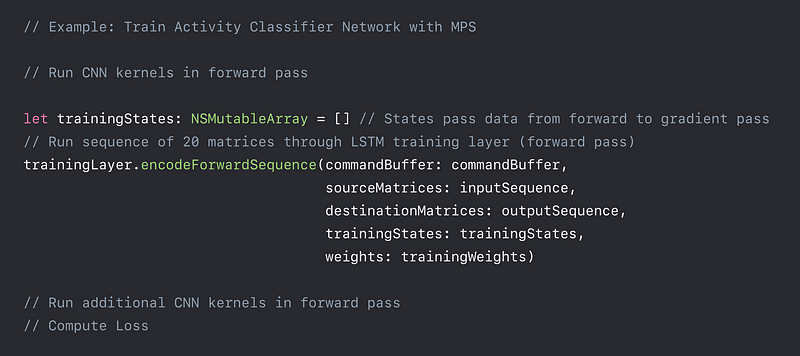

CNN Training

Training

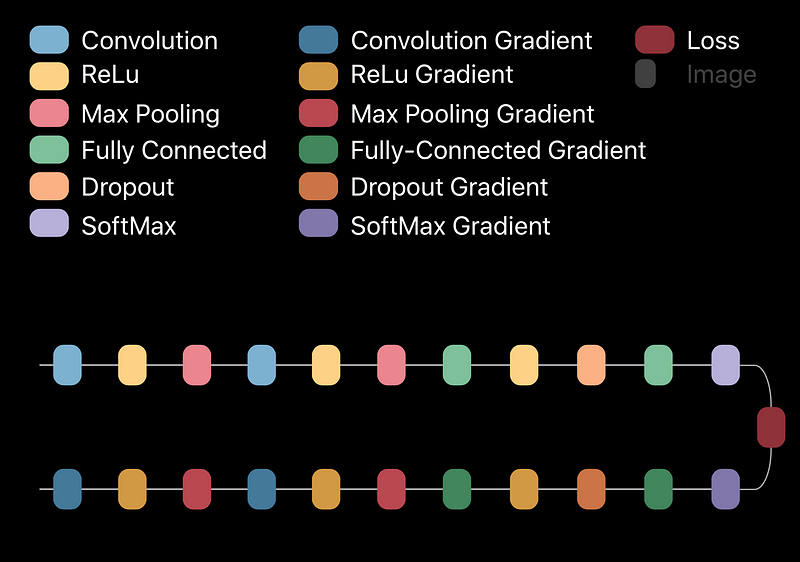

Forward Pass

Loss computation

Gradient pass

Weight update

Iterate

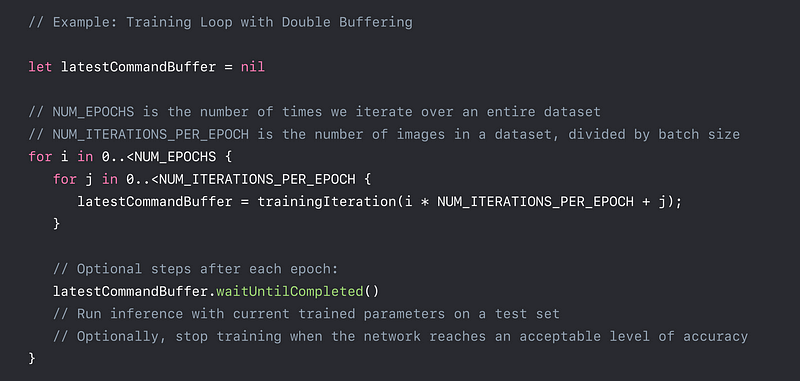

- Forward pass → Loss computation → Gradient pass → Weight update
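
The four steps above can be sketched in plain Python for the smallest possible model, a single weight `w` fitted so that `w * x` matches the targets. This is a conceptual illustration and not MPS code; the function names, the mean-squared-error loss, and the learning rate are assumptions chosen for the example.

```python
def forward(w, x):
    """Forward pass for a one-weight linear model."""
    return w * x


def loss_fn(predictions, targets):
    """Mean squared error between predictions and targets."""
    n = len(targets)
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / n


def gradient(w, xs, targets):
    """Derivative of the mean squared error with respect to w."""
    n = len(xs)
    return sum(2.0 * (forward(w, x) - t) * x for x, t in zip(xs, targets)) / n


def train(xs, targets, w=0.0, learning_rate=0.1, iterations=20):
    history = []
    for _ in range(iterations):                        # iterate
        predictions = [forward(w, x) for x in xs]      # forward pass
        history.append(loss_fn(predictions, targets))  # loss computation
        grad = gradient(w, xs, targets)                # gradient pass
        w -= learning_rate * grad                      # weight update
    return w, history


xs = [1.0, 2.0, 3.0]
targets = [2.0, 4.0, 6.0]  # generated by the "true" weight 2.0
w, history = train(xs, targets)
print(f"learned weight: {w:.6f}")
print(f"first loss: {history[0]:.3f}, last loss: {history[-1]:.3e}")
```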

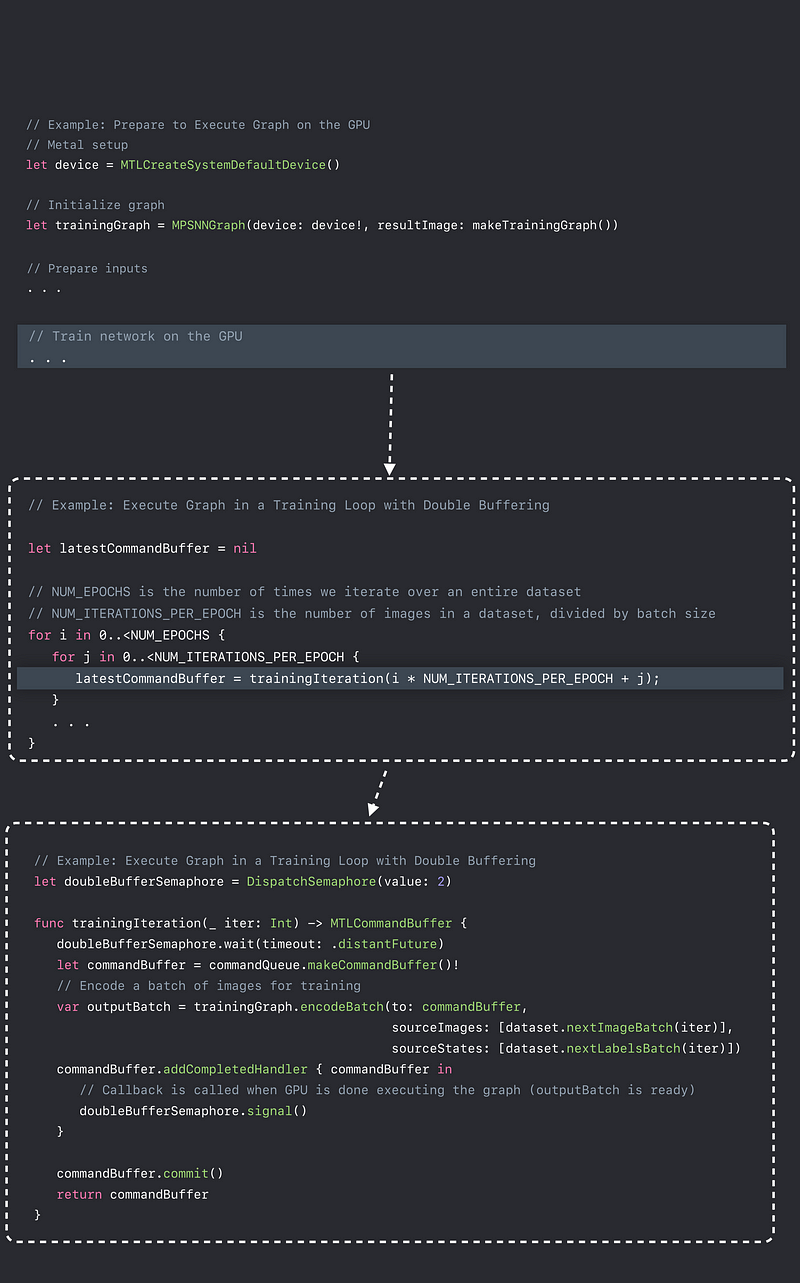

Training a Neural Network with MPS

- Create training graph

- Prepare inputs

- Specify weights

- Execute graph (Graph updates weights)

- Complete training process

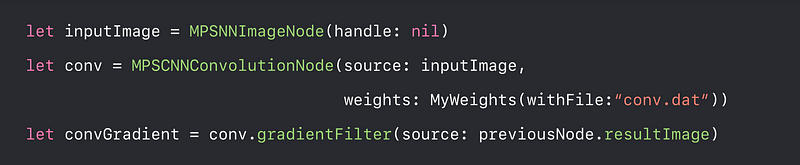

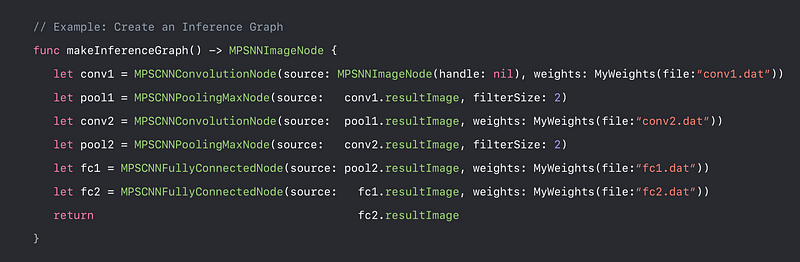

Create Training Graph

- Describe neural network using graph API

- Image nodes — Data

- Filter nodes — Operations
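
The split between image nodes (data) and filter nodes (operations) can be illustrated with a toy graph in Python. The classes `ImageNode` and `FilterNode` and the `execute` function below are hypothetical stand-ins that only echo the slide's terms; they are not the MPS graph API.

```python
class ImageNode:
    """Data flowing through the graph: a graph input or a filter's result."""

    def __init__(self, producer=None):
        # FilterNode that produces this image, or None for a graph input.
        self.producer = producer


class FilterNode:
    """An operation applied to one source image."""

    def __init__(self, source, operation):
        self.source = source
        self.operation = operation
        self.result_image = ImageNode(producer=self)


def execute(image, inputs):
    """Evaluate the graph that ends at `image`, given data for the inputs."""
    if image.producer is None:
        return inputs[image]
    upstream = execute(image.producer.source, inputs)
    return image.producer.operation(upstream)


def relu(values):
    return [max(0.0, v) for v in values]


def halve(values):
    return [0.5 * v for v in values]


# Describe the network: source image -> ReLU -> scale by 0.5.
source = ImageNode()
relu_node = FilterNode(source, relu)
scale_node = FilterNode(relu_node.result_image, halve)

output = execute(scale_node.result_image, {source: [-1.0, 2.0, 3.0]})
print(output)  # [0.0, 1.0, 1.5]
```

Describing the network first and executing it later is what lets a graph runtime see the whole structure before any work is scheduled.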

Create an Inference Graph

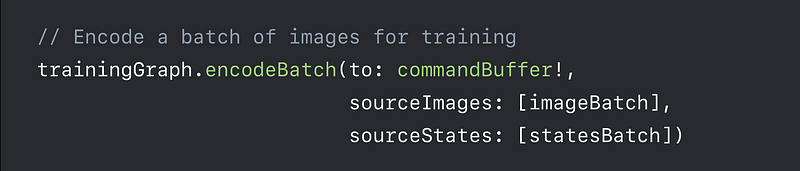

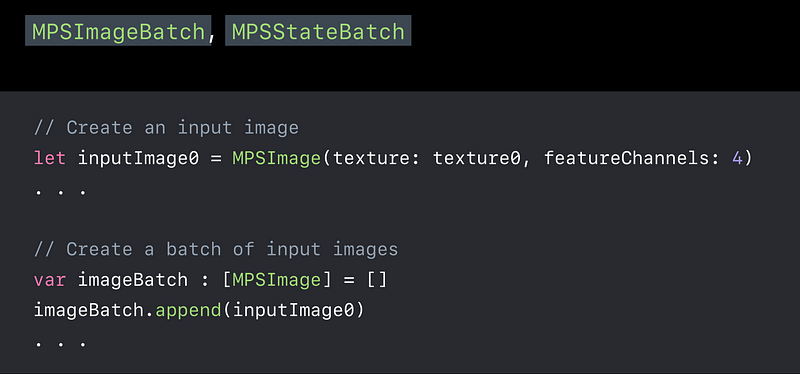

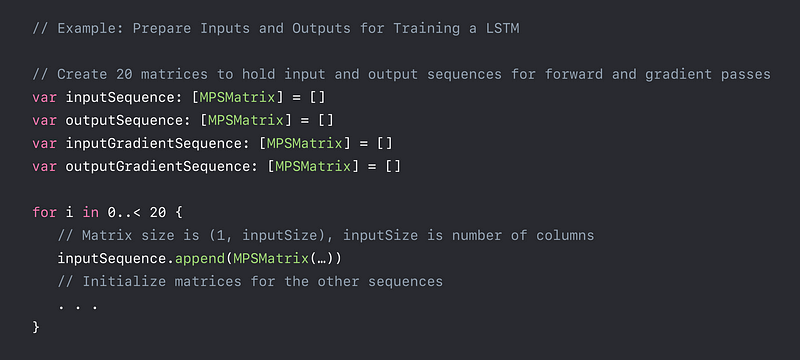

Prepare Inputs

- Inputs to the graph

- Batch of source images

- Batch of source states

Batches

- Batches are arrays of images or states

States

- MPSState passes state of forward node to gradient node

- Graph manages all states
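
As a rough illustration of why a gradient node needs state from its forward node, consider ReLU: the gradient pass must know which inputs were positive during the forward pass. In this Python sketch the "state" is simply a list of booleans; the function names are hypothetical and the code is not the MPS API.

```python
def relu_forward(inputs):
    """Forward node: returns the outputs plus the state the gradient needs."""
    outputs = [max(0.0, v) for v in inputs]
    state = [v > 0.0 for v in inputs]  # which inputs passed through
    return outputs, state


def relu_gradient(upstream_gradients, state):
    """Gradient node: lets gradients through only where the input was positive."""
    return [g if passed else 0.0 for g, passed in zip(upstream_gradients, state)]


outputs, state = relu_forward([-1.0, 2.0, 0.5])
gradients = relu_gradient([10.0, 10.0, 10.0], state)
print(outputs)    # [0.0, 2.0, 0.5]
print(gradients)  # [0.0, 10.0, 10.0]
```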

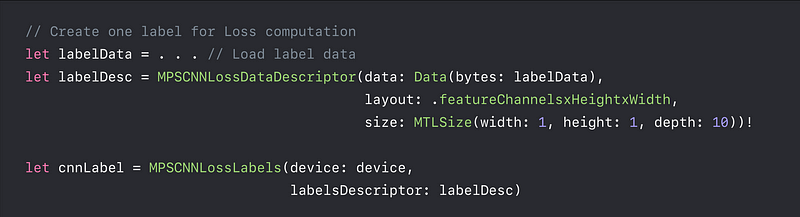

Loss Labels

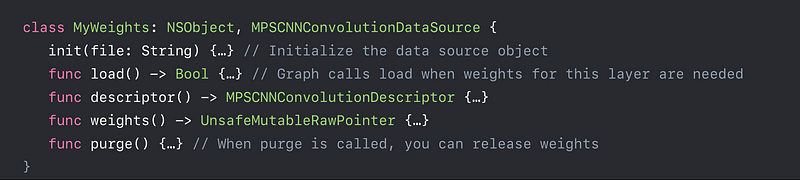

Data Source Providers

- Convolution

- Fully Connected

- Batch normalization

- Instance normalization

- Just-in-time loading and purging of weights data

- Minimize memory footprint
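
The idea of just-in-time loading and purging can be sketched in Python as a provider object that holds weights only between a `load` call and a `purge` call. The class `WeightsProvider`, its methods, and the `fake_read` function are hypothetical names for this illustration and do not reproduce the MPS data source protocols.

```python
class WeightsProvider:
    """Holds weights in memory only while a consumer needs them."""

    def __init__(self, read_weights):
        self._read_weights = read_weights  # callable that fetches the data
        self.weights = None                # nothing resident until load()

    def load(self):
        """Bring the weights into memory; returns True on success."""
        self.weights = self._read_weights()
        return True

    def purge(self):
        """Release the weights so they no longer occupy memory."""
        self.weights = None


def fake_read():
    """Stands in for reading a weights file from disk."""
    return [0.1, -0.2, 0.3]


provider = WeightsProvider(fake_read)
resident_before = provider.weights is not None

provider.load()                       # consumer asks for the data
loaded_count = len(provider.weights)  # ... and uses it
provider.purge()                      # consumer is done with it

resident_after = provider.weights is not None
print(resident_before, loaded_count, resident_after)  # False 3 False
```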

Execute Graph

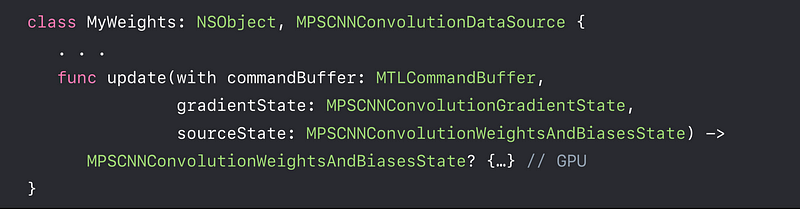

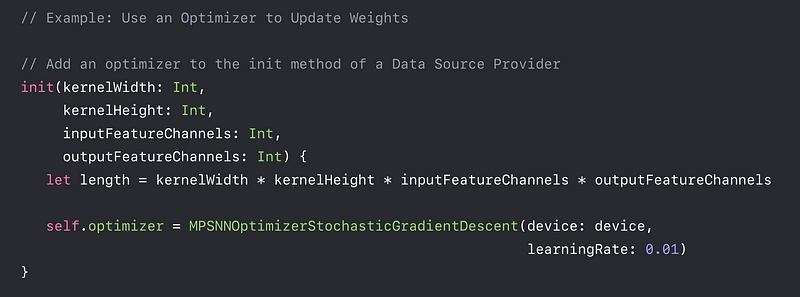

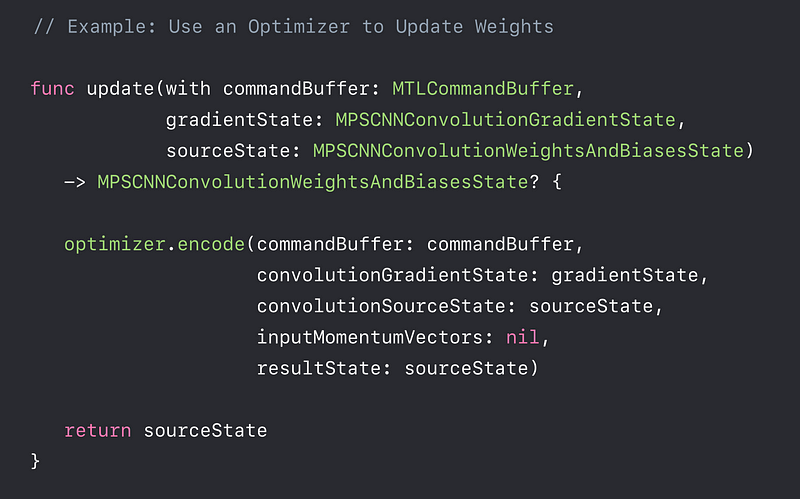

Updating Weights

- Implement optional update method on Data Source Provider

- Graph calls update method automatically

Optimizer

- Describe how to take update step on training parameters

- Used in update method of Data Source Provider

- Variants

  - MPSNNOptimizerAdam

  - MPSNNOptimizerStochasticGradientDescent

  - MPSNNOptimizerRMSProp

  - Custom
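
For readers unfamiliar with the three named optimizers, the sketch below shows textbook scalar versions of their update steps in Python, called from a hypothetical provider's `update` method. The class names, default hyperparameters, and the `Provider` class are assumptions for illustration; the MPS classes may differ in details.

```python
import math


class SGD:
    def __init__(self, learning_rate=0.1):
        self.learning_rate = learning_rate

    def step(self, weight, grad):
        return weight - self.learning_rate * grad


class RMSProp:
    def __init__(self, learning_rate=0.001, decay=0.9, epsilon=1e-8):
        self.learning_rate = learning_rate
        self.decay = decay
        self.epsilon = epsilon
        self.mean_square = 0.0  # running average of squared gradients

    def step(self, weight, grad):
        self.mean_square = (self.decay * self.mean_square
                            + (1.0 - self.decay) * grad * grad)
        scale = math.sqrt(self.mean_square) + self.epsilon
        return weight - self.learning_rate * grad / scale


class Adam:
    def __init__(self, learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-8):
        self.learning_rate = learning_rate
        self.beta1 = beta1
        self.beta2 = beta2
        self.epsilon = epsilon
        self.m = 0.0  # running average of gradients
        self.v = 0.0  # running average of squared gradients
        self.t = 0    # step counter for bias correction

    def step(self, weight, grad):
        self.t += 1
        self.m = self.beta1 * self.m + (1.0 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1.0 - self.beta2) * grad * grad
        m_hat = self.m / (1.0 - self.beta1 ** self.t)
        v_hat = self.v / (1.0 - self.beta2 ** self.t)
        return weight - self.learning_rate * m_hat / (math.sqrt(v_hat) + self.epsilon)


class Provider:
    """Hypothetical provider whose update method applies an optimizer."""

    def __init__(self, weight, optimizer):
        self.weight = weight
        self.optimizer = optimizer

    def update(self, grad):
        self.weight = self.optimizer.step(self.weight, grad)
        return self.weight


# One update step from weight 1.0 with gradient 0.5 for each variant.
results = {
    "sgd": Provider(1.0, SGD()).update(0.5),
    "rmsprop": Provider(1.0, RMSProp()).update(0.5),
    "adam": Provider(1.0, Adam()).update(0.5),
}
for name, value in results.items():
    print(f"{name}: {value:.6f}")
```

Keeping the update rule in a separate optimizer object means the provider's `update` method stays the same when the rule is swapped, which is also where a custom rule would plug in.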

Complete Training Process

Demo

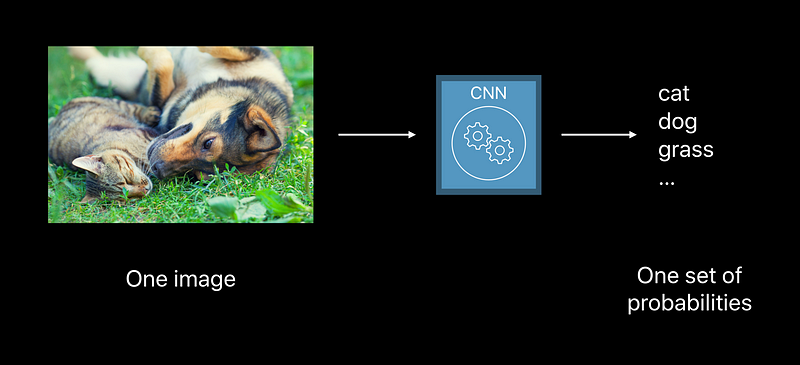

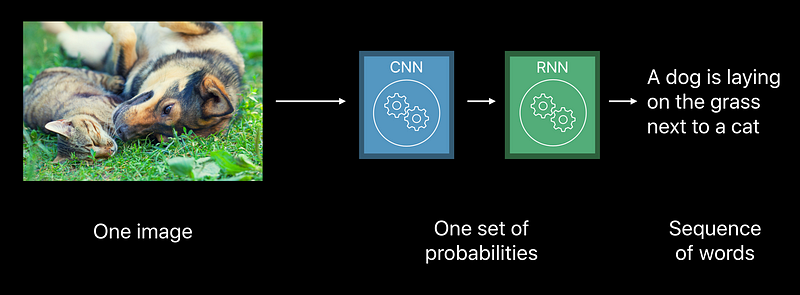

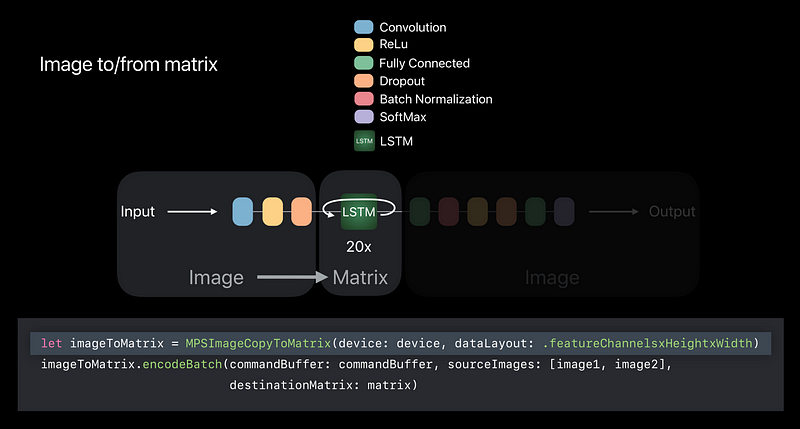

CNN

- 1 to 1

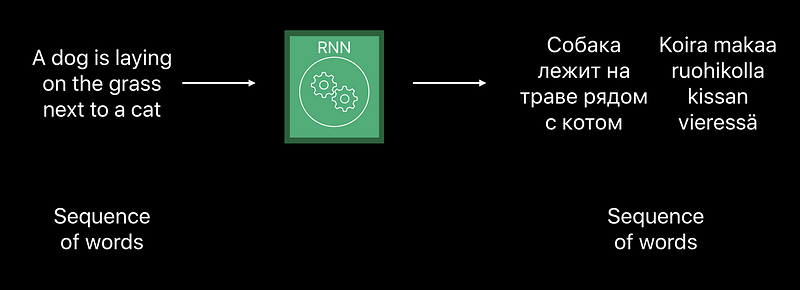

RNN

- 1 to Many

- Many to Many

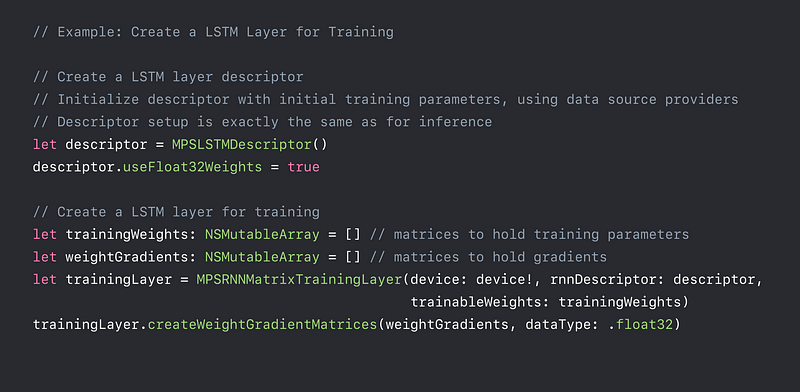

Recurrent Neural Networks

Variants for inference and training (new)

- Single Gate

- Long Short-Term Memory (LSTM)

- Gated Recurrent Unit (GRU)

- Minimally Gated Unit (MGU)
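
To show how the variants differ in structure, here is a scalar Python sketch of two of them using their textbook formulations: a single-gate step, and an LSTM step with input, forget, and output gates plus a cell state. Function names, parameter layout, and the weights are assumptions for illustration; this is not the MPS RNN API.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def single_gate_step(x, h_prev, w_x, w_h, b):
    """Single gate: the new hidden state mixes the input and the old state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step; params maps a gate name to (w_x, w_h, b)."""
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre_activation("input"))        # how much new content to write
    f = sigmoid(pre_activation("forget"))       # how much old cell state to keep
    o = sigmoid(pre_activation("output"))       # how much cell state to expose
    g = math.tanh(pre_activation("candidate"))  # proposed new content
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c


def run_single_gate(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Many-to-many use: one output per input, state carried between steps."""
    h = 0.0
    outputs = []
    for x in sequence:
        h = single_gate_step(x, h, w_x, w_h, b)
        outputs.append(h)
    return outputs


outputs = run_single_gate([1.0, 0.0, -1.0])
print([round(v, 4) for v in outputs])

# With all-zero parameters every gate is 0.5 and the candidate is 0,
# so the cell state is simply halved: 2.0 -> 1.0.
zero_params = {name: (0.0, 0.0, 0.0)
               for name in ("input", "forget", "output", "candidate")}
h, c = lstm_step(1.0, 0.0, 2.0, zero_params)
print(h, c)
```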

Activity Classifier

Inference

Training

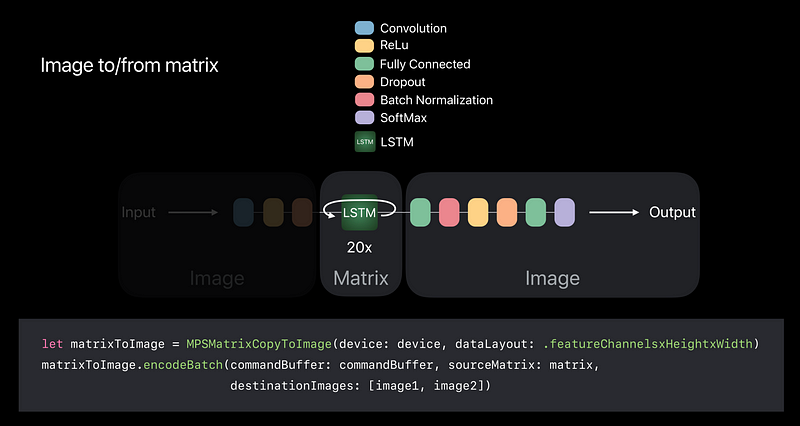

Data Converters

Demo

Object classification training using TensorFlow with MPS