Creating Photo and Video Effects Using Depth

WWDC 2018

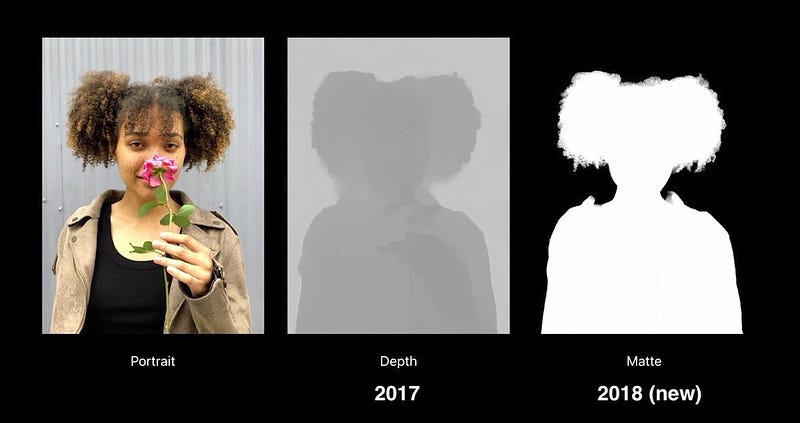

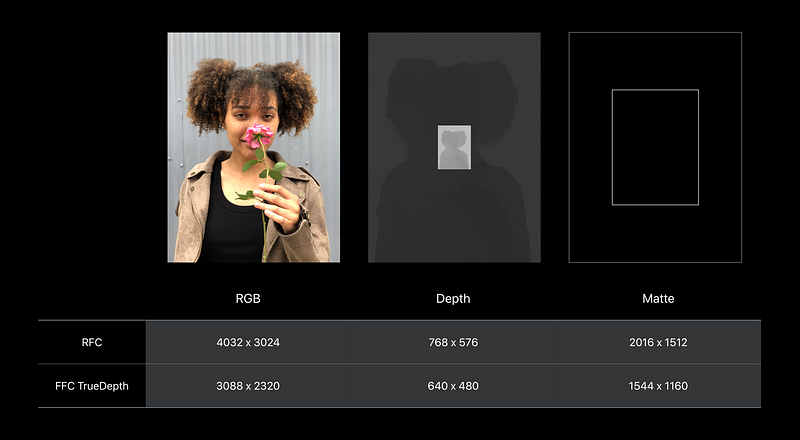

Portrait Matte

- iOS 12

- Front and rear

- Portrait

- People

- Linear

- No guarantee

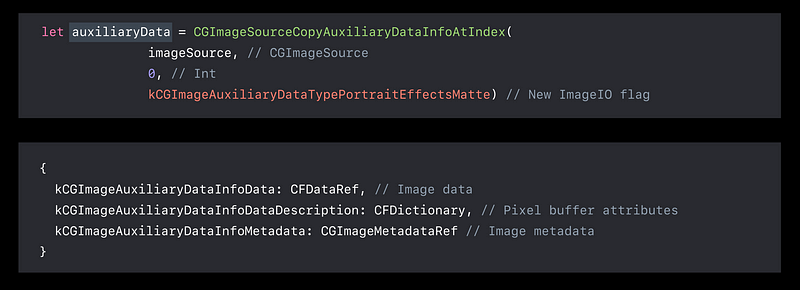

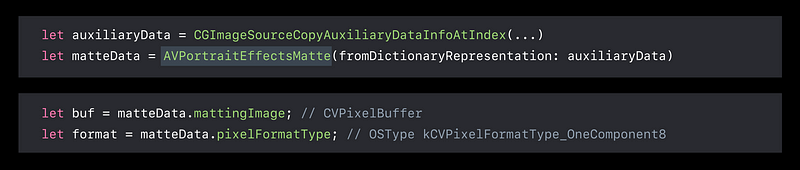

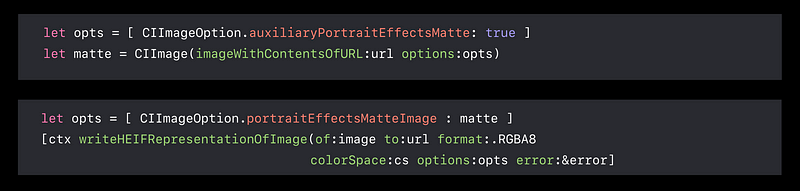

Loading

ImageIO

AVPortraitEffectsMatte

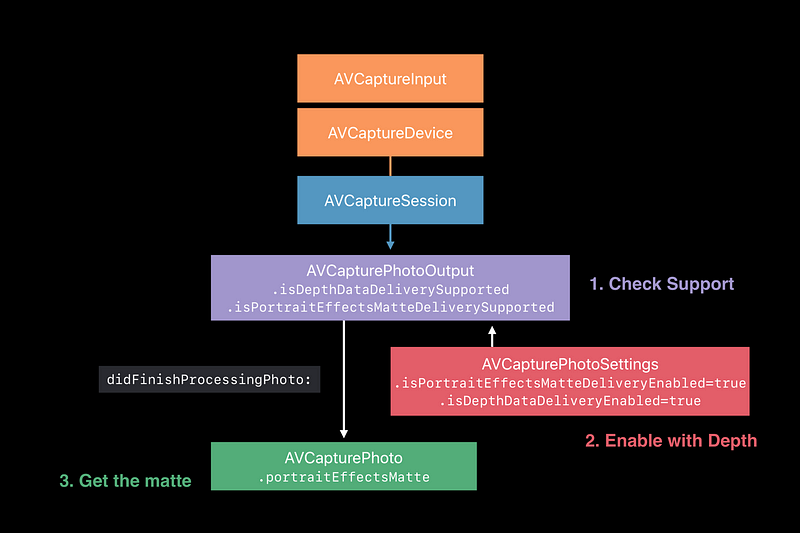

Capture

AVCapturePhoto

Loading and Saving

Sample

- Capturing Photos with Depth

- Enhancing Live Video by Leveraging TrueDepth Camera Data

- Streaming Depth Data from the TrueDepth Camera

Demo 1

Working with Depth

Demo 2

Working with the Portrait Effects Matte

Real-Time Video Effects with TrueDepth

- TrueDepth stream

- Point clouds

- Backdrop

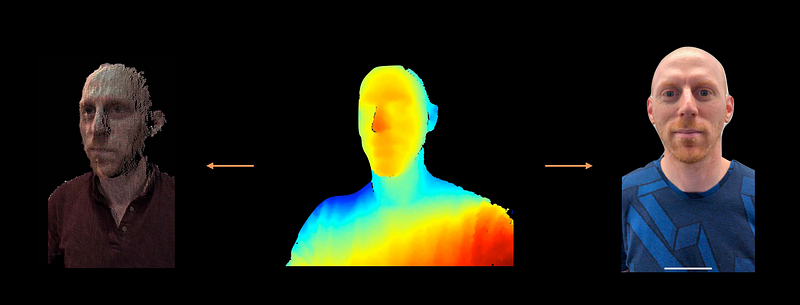

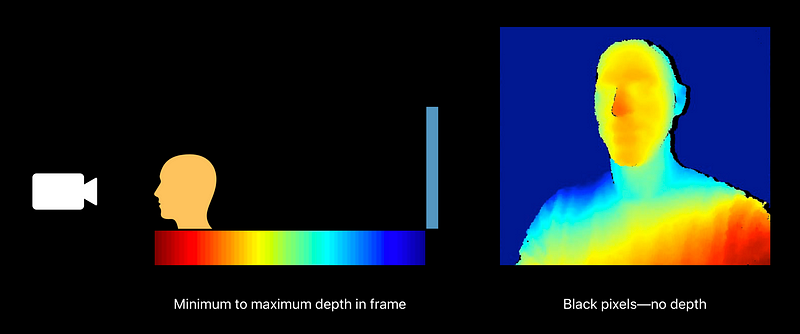

Depth Map

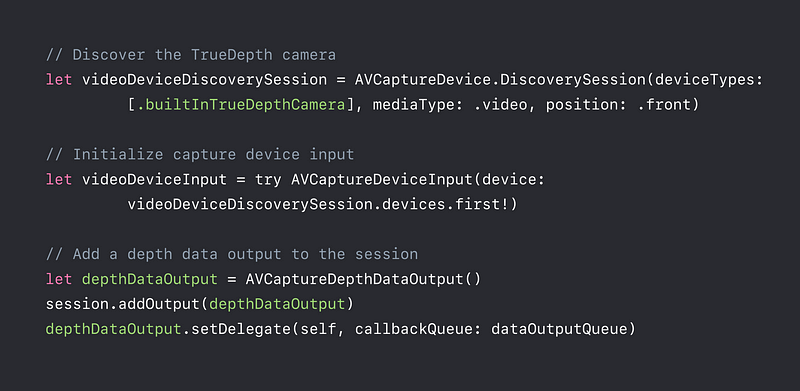

How do you add a TrueDepth stream?

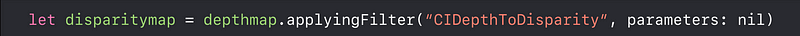

Disparity or Depth?

- Disparity usually yields better results

- Depth values are intuitive, but the error grows with Z²
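The Z² relationship can be made concrete with a small sketch. It assumes normalized disparity d in 1/meters, so that Z = 1/d; the function names are hypothetical and not part of any Apple API. Differentiating Z = 1/d gives |dZ/dd| = Z², so a fixed disparity error produces a depth error that scales with the square of the distance.

```python
def depth_from_disparity(disparity):
    """Depth in meters from normalized disparity in 1/meters (assumed Z = 1/d)."""
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    return 1.0 / disparity


def depth_error(depth, disparity_error):
    """First-order depth error caused by a small disparity error.

    Since Z = 1/d, |dZ/dd| = 1/d^2 = Z^2, so the depth error is
    approximately Z^2 times the disparity error.
    """
    return depth * depth * disparity_error


# The same disparity error costs four times more depth accuracy
# each time the distance doubles.
for z in (0.5, 1.0, 2.0):
    print(z, depth_error(z, 0.01))
```

This is one way to read the slide's advice: working in disparity keeps the error roughly uniform across the range, while converting to depth amplifies it for distant points.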

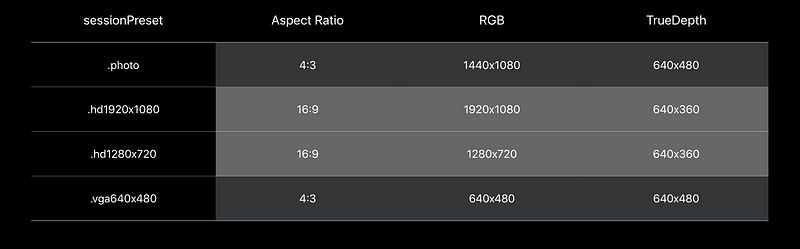

Resolution

Test System Pressure

High resolution + fast frame rate + processing + duration

AVCaptureDevice.SystemPressureState.Level

- Nominal

- Fair

- Serious

- Critical

- Shutdown

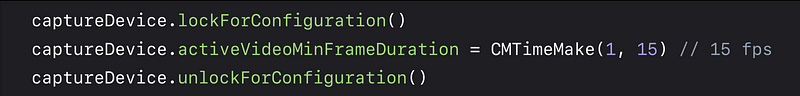

Monitor System Pressure and Adapt

Prevent AVCaptureDevice shutdown

- Reduce frame rate when level is increasing:

- Nominal (30 fps) → Serious (15 fps)

- Nominal (30 fps) → Fair (24 fps) → Serious (20 fps) → Critical (15 fps)
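The graduated policy above can be sketched as a simple lookup. This is an illustration of the mapping only: the lowercase level names are hypothetical stand-ins for the AVCaptureDevice.SystemPressureState.Level values, and applying the rate to a capture device is left out. The shutdown level is deliberately unmapped here, on the assumption that capture has already stopped by then.

```python
# Frame rates taken from the graduated policy on the slide.
GRADUATED_FPS = {
    "nominal": 30,
    "fair": 24,
    "serious": 20,
    "critical": 15,
}


def target_frame_rate(level, table=GRADUATED_FPS):
    """Return the frame rate to request for a given pressure level.

    Raises ValueError for levels the table does not cover (for
    example "shutdown"), so the caller has to handle them explicitly.
    """
    try:
        return table[level]
    except KeyError:
        raise ValueError(f"no frame rate defined for level {level!r}")


# Example: pressure rises from nominal to critical.
for level in ("nominal", "fair", "serious", "critical"):
    print(level, target_frame_rate(level))
```

Stepping down gradually, rather than jumping straight from 30 fps to 15 fps, trades a slightly later reaction for a less noticeable change in the video.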

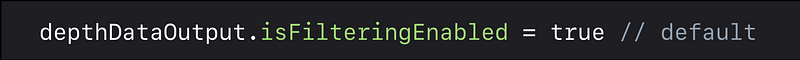

Filtered Depth Map

- Smoothed, spatially and temporally

- Holes filled, using RGB

- For photography/segmentation applications

- iOS 12: spatial resolution of filtering improved from iOS 11

Using Raw Depth Data

- Use for point clouds, or real world measurements

- Pixels with no depth have the value 0 ⚠️

- Watch out for averaging / downsampling
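The averaging warning follows from holes being stored as 0: a plain mean pulls the result toward the camera. A minimal sketch of hole-aware 2x downsampling, assuming the depth map is a list of rows of floats (the function names are hypothetical):

```python
def mean_valid_depth(values):
    """Average only the samples that carry depth; 0 marks a hole."""
    valid = [v for v in values if v > 0]
    if not valid:
        return 0.0  # the whole neighborhood is a hole, so keep it a hole
    return sum(valid) / len(valid)


def downsample_2x(depth_map):
    """Halve the resolution, ignoring holes inside each 2x2 block."""
    rows, cols = len(depth_map), len(depth_map[0])
    out = []
    for r in range(0, rows - 1, 2):
        out_row = []
        for c in range(0, cols - 1, 2):
            block = [
                depth_map[r][c], depth_map[r][c + 1],
                depth_map[r + 1][c], depth_map[r + 1][c + 1],
            ]
            out_row.append(mean_valid_depth(block))
        out.append(out_row)
    return out


# A surface at 1 m with two holes: a naive mean would report 0.5 m.
block = [[1.0, 0.0],
         [1.0, 0.0]]
naive = sum(sum(row) for row in block) / 4
print(naive, downsample_2x(block))
```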

Depth Map Holes ⚠️

- The TrueDepth camera detects most objects up to about 5 meters

- Low reflectivity materials absorb light

- Reflectivity

- Specular materials reflect light only from certain directions

- Outdoor conditions are more challenging

- Shadow from parallax between projector and camera

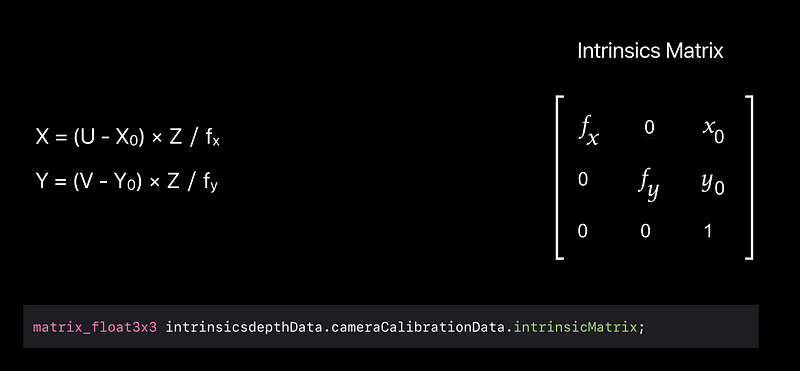

Point clouds

RGB-D

Vertex Shader

Point location

- Z = func( U, V )

- Transform to ( X, Y, Z )

- Reproject with view matrix

Fragment Shader

Point color

- Get vertex info

- Discard holes ( Z == 0 )

- Apply color from RGB frame at vertex coordinates
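The two shader stages can be approximated on the CPU as follows. This sketch assumes a pinhole camera model with intrinsics fx, fy, cx, cy, which the slides do not spell out, and it omits the view-matrix reprojection step. All names are hypothetical.

```python
def unproject(u, v, z, fx, fy, cx, cy):
    """Map pixel (u, v) with depth z to camera-space (X, Y, Z).

    Assumes a pinhole model. Returns None for holes (z == 0),
    mirroring the discard in the fragment stage.
    """
    if z == 0:
        return None
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)


def point_cloud(depth_map, rgb, fx, fy, cx, cy):
    """Build (position, color) pairs from a registered RGB-D frame."""
    points = []
    for v, row in enumerate(depth_map):
        for u, z in enumerate(row):
            position = unproject(u, v, z, fx, fy, cx, cy)
            if position is None:
                continue  # skip holes
            points.append((position, rgb[v][u]))
    return points


depth = [[0.0, 1.0],
         [2.0, 0.0]]
colors = [["a", "b"],
          ["c", "d"]]
print(point_cloud(depth, colors, fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```

Because the depth map is registered to the video frame, the same (u, v) coordinates index both the depth value and the color.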

Backdrop

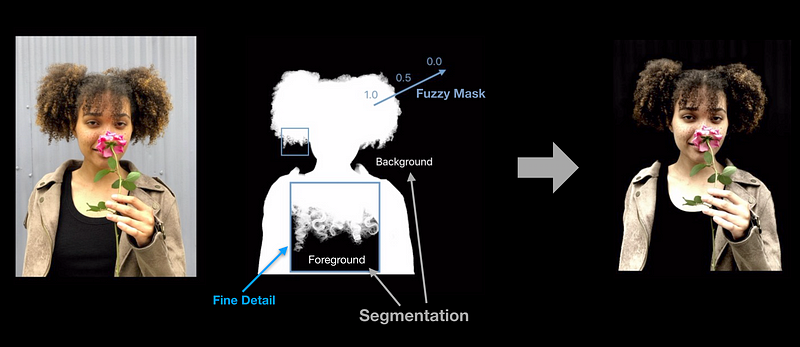

Per-Frame, Real-Time Processing

- Detect a face

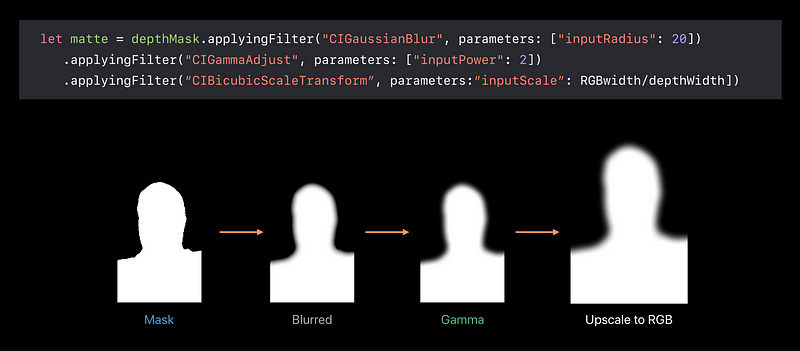

- Create binary foreground mask, smooth, and upscale

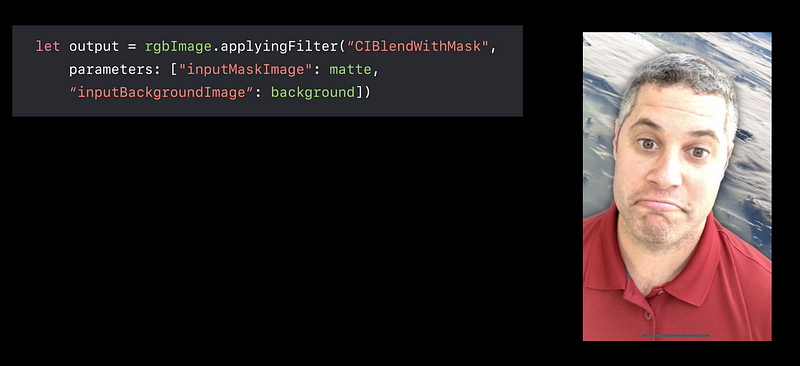

- Upscale foreground to background and blend

- Resize background to video resolution once:

- Not upscaling foreground

- Blending at lower resolution

Getting Face Metadata

- Until a face is detected, use default face depth of 0.5 meters

- Transform center of face to depth map coordinates, and get its depth

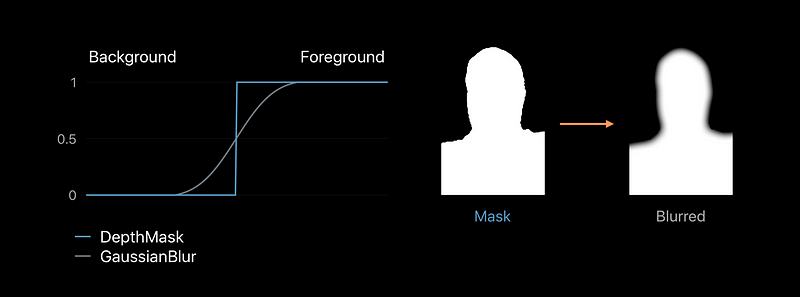

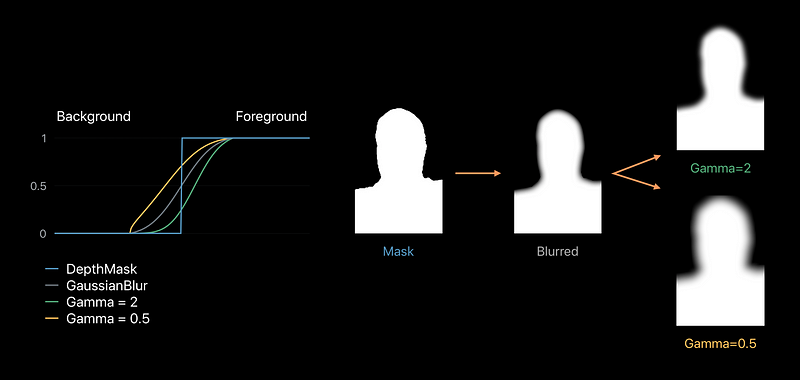

Binary Foreground Mask

- Threshold = face depth + 0.25 meters

- Binary mask:

- Foreground = 1

- Background = 0
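The thresholding step can be sketched as below, using the 0.25 meter margin and the 0.5 meter default face depth from the slides. Treating holes (depth 0) as background is an assumption of this sketch, not something the slides state, and the function name is hypothetical.

```python
DEFAULT_FACE_DEPTH = 0.5   # meters, used until a face is detected
FOREGROUND_MARGIN = 0.25   # meters behind the face still counted as foreground


def foreground_mask(depth_map, face_depth=None):
    """Return a binary mask: 1 for foreground, 0 for background.

    Pixels with depth 0 are holes and are treated as background
    (an assumption made for this sketch).
    """
    if face_depth is None:
        face_depth = DEFAULT_FACE_DEPTH
    threshold = face_depth + FOREGROUND_MARGIN
    return [
        [1 if 0 < z <= threshold else 0 for z in row]
        for row in depth_map
    ]


# No face yet: the threshold is 0.5 + 0.25 = 0.75 m.
print(foreground_mask([[0.4, 0.9, 0.0]]))
```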

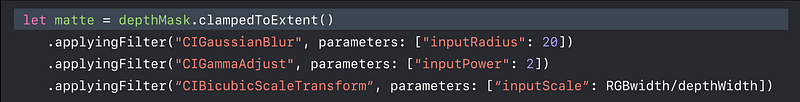

Gaussian Blur

Gamma Adjust

Binary Mask

Alpha matte

Clamp Before Filtering

Avoiding edge softening
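To illustrate why clamping matters, here is a one-dimensional sketch of turning a binary mask into an alpha matte with a Gaussian blur followed by a gamma adjustment. It is a simplification with hypothetical names: a real pipeline would filter in two dimensions, likely on the GPU. Out-of-range samples are clamped to the nearest edge pixel, so foreground that touches the image border stays fully opaque instead of fading toward an implicit zero.

```python
import math


def gaussian_kernel(radius, sigma):
    """Normalized 1D Gaussian weights of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row_clamped(row, kernel):
    """Blur one row, repeating the edge pixels beyond the border."""
    radius = len(kernel) // 2
    last = len(row) - 1
    out = []
    for i in range(len(row)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), last)  # clamp to the extent
            acc += w * row[j]
        out.append(acc)
    return out


def alpha_from_mask(row, kernel, gamma):
    """Binary mask -> blurred mask -> gamma-adjusted alpha matte."""
    return [a ** gamma for a in blur_row_clamped(row, kernel)]


kernel = gaussian_kernel(radius=2, sigma=1.0)
print(alpha_from_mask([1, 1, 1, 0, 0, 0], kernel, gamma=2.0))
```

With a gamma above 1 the soft edge is pulled toward the background; a gamma below 1 would widen the foreground instead.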

Blending Foreground with Background
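A minimal sketch of the blend, using standard alpha compositing (out = alpha × foreground + (1 − alpha) × background). The slides do not give the exact formula used in the session, so this is the conventional form rather than a confirmed one; pixels are assumed to be tuples of floats in the range 0 to 1, and the function names are hypothetical.

```python
def blend_pixel(fg, bg, alpha):
    """Composite one foreground pixel over one background pixel."""
    return tuple(alpha * f + (1.0 - alpha) * b for f, b in zip(fg, bg))


def blend_row(fg_row, bg_row, alpha_row):
    """Composite a row of pixels using a per-pixel alpha matte."""
    return [
        blend_pixel(f, b, a)
        for f, b, a in zip(fg_row, bg_row, alpha_row)
    ]


red = (1.0, 0.0, 0.0)    # stands in for the camera frame
blue = (0.0, 0.0, 1.0)   # stands in for the backdrop image
print(blend_row([red, red, red], [blue, blue, blue], [1.0, 0.5, 0.0]))
```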

TrueDepth Stream

640 x 480 depth map registered to video at 30 fps